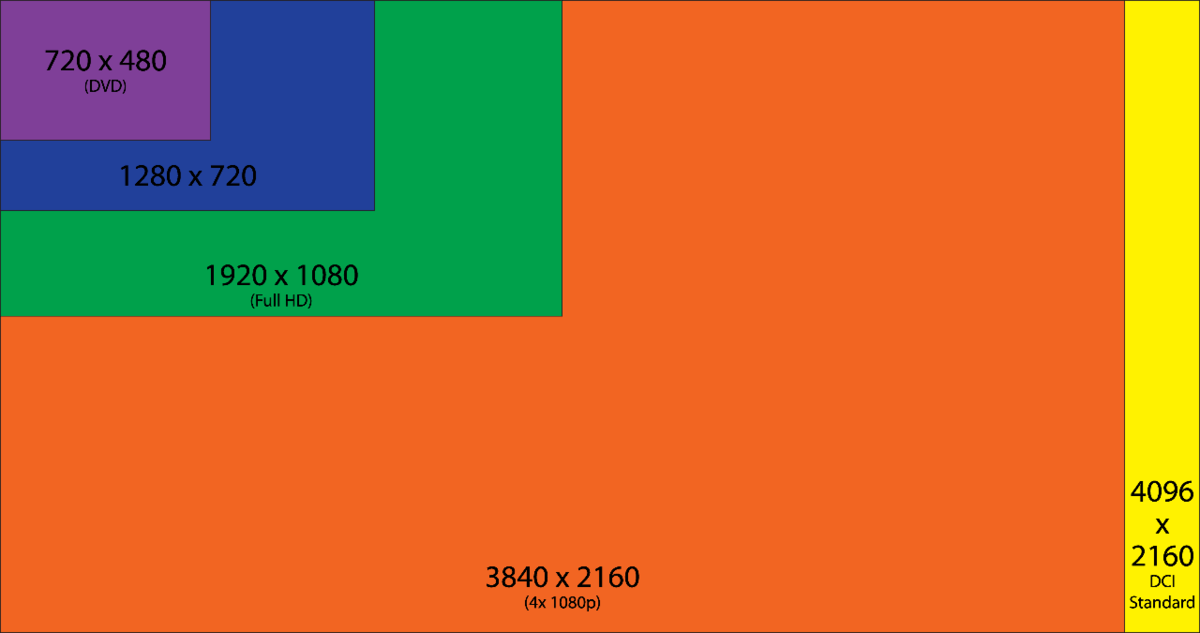

Ultra High Definition (UHD), more colloquially known as 4K (though that is technically incorrect, as 4K is defined as a resolution of 4096 x 2160), is one of the next big things coming to displays, from smartphones all the way to television sets. It is the successor to HD or “1080p” and weighs in at a resolution of 3840 x 2160, or four times the pixel count of HD. It has been a long time coming, as 1080p has been the highest resolution for the majority of displays for well over a decade.
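For anyone who wants to check the "four times" figure, here is a quick sketch of the arithmetic. It assumes the usual 1920 x 1080 dimensions for 1080p; the dictionary and function names are just made up for this illustration.

```python
# Resolutions as (width, height) in pixels.
# 1920 x 1080 for "1080p" is assumed; the other two come from the text above.
RESOLUTIONS = {
    "HD (1080p)": (1920, 1080),
    "UHD": (3840, 2160),
    "DCI 4K": (4096, 2160),
}


def pixel_count(width, height):
    """Total number of pixels on a screen of the given dimensions."""
    return width * height


def ratio_to_hd(name):
    """How many times more pixels a named resolution has than 1080p."""
    width, height = RESOLUTIONS[name]
    hd_width, hd_height = RESOLUTIONS["HD (1080p)"]
    return pixel_count(width, height) / pixel_count(hd_width, hd_height)


for name, (width, height) in RESOLUTIONS.items():
    print(f"{name}: {pixel_count(width, height):,} pixels, "
          f"{ratio_to_hd(name):.2f}x HD")
```

UHD doubles both the width and the height of 1080p, which is why the pixel count quadruples rather than doubles.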

While 1080p may have been what the majority of people considered the highest resolution available, there is another, higher resolution that hasn’t had the same kind of attention (mainly because the highest resolution content available runs at 1080p). QHD or 1440p is an in-between resolution that runs at 2560 x 1440, which works out to roughly 1.8 times the pixel count of 1080p and a little under half that of UHD/4K. It was available as a display resolution long before the rise of UHD.

The problem with 1440p is that up until recently it has been largely restricted to computer monitors at or around $1000 and targeted at professional usage. With the new push for UHD, 1440p monitors have fallen in price to fit between HD and UHD screens. So why is this important? It’s all about pixel density.


Pixel density is the number of individual pixels packed into a given space, usually measured in pixels per inch (PPI), though it is often loosely called dots per inch (DPI). The closer together the pixels get, the sharper the perceived image becomes. If you compare reading text on a typical 1080p computer monitor with reading a magazine, it becomes very clear (pun accidentally intended) that the magazine is sharper and easier to read. This is because print media is usually produced at 300 DPI or more, while a desktop computer display has traditionally been somewhere around 72 to 100 PPI.
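If you want to work out the pixel density of your own screen, the usual approach is to divide the diagonal length in pixels by the diagonal length in inches. Below is a minimal sketch of that calculation; the 24-inch monitor size is only an example I picked, and the function name is my own.

```python
import math


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density of a screen from its resolution and diagonal size."""
    # Diagonal length in pixels, via the Pythagorean theorem.
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_in


# Example: the same (assumed) 24-inch monitor at two resolutions.
DIAGONAL_INCHES = 24

for label, (width, height) in {
    "1080p": (1920, 1080),
    "UHD": (3840, 2160),
}.items():
    density = pixels_per_inch(width, height, DIAGONAL_INCHES)
    print(f"{label} at {DIAGONAL_INCHES} inches: {density:.0f} pixels per inch")
```

At the same screen size, UHD doubles the density of 1080p because both dimensions double. You can plug in any other resolution and diagonal to see where a particular screen lands.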

So who does this matter to? Well, I will have to leave that for Part 2 of this post, in my usual fashion.